Life after Win XP: another approach - Part 3

In part 2 of this series I outlined several options for organisations that want to move their current Windows XP environment to free and open source software (FOSS), rather than to a more recent Microsoft platform, when Microsoft officially abandons its venerable Windows XP in April 2014.

On to the next challenge: once you've decided your organisation can be productive with a FOSS environment, you have to work out how to set up and maintain it.

Enterprise FOSS

Many people think that Microsoft invented the idea of "enterprise computing": large deployments of computers managed in bulk by a small number of system administrators on behalf of users in an organisation. That credit should go to UNIX, a family of proprietary enterprise computer systems that predate Microsoft's rise by at least a decade and did their job with an elegant simplicity never matched by Microsoft's lower-cost and now pervasive offerings. Today Microsoft rules the enterprise desktop, and UNIX is an old-school relic, largely forgotten by all but the most venerable IT practitioners.

Linux, the most widely used FOSS operating system, was built on the same design philosophy as UNIX, and has inherited many of UNIX's strengths like modularity, simplicity, and stability, but none of its high costs. The network models I describe below all assume a network of desktops built around one or more Linux servers. These models are not exclusive; they can be mixed and matched to suit your requirements.

Network Storage and Identity Management

The traditional organisational network, consisting of individual desktop computers that use centralised authentication to manage access and centralised network storage for important data, is easy to set up in a FOSS world.

User management and authentication are generally handled either by a system called Samba (more about that next week) or by OpenLDAP, a FOSS implementation of LDAP (the Lightweight Directory Access Protocol), which is an open standard. Microsoft Active Directory is itself a set of tools and user interfaces built around Microsoft's own LDAP implementation.
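
To make the directory side of this a little more concrete, here is a minimal Python sketch of the lookup an LDAP-aware login service typically performs: it searches the directory for the user's entry, then tries to bind as that entry with the supplied password. Only the search filter is built here. The `posixAccount` object class and `uid` attribute come from the widely used RFC 2307 schema, but whether your directory uses them is an assumption, and the function names are illustrative. The username is escaped as RFC 4515 requires, so a crafted name cannot change the meaning of the query.

```python
"""Sketch: building the search filter a login service sends to an LDAP directory.

Assumption (hypothetical layout): user entries are posixAccount objects
identified by their uid attribute, as in the common RFC 2307 schema.
"""


def escape_filter_value(value: str) -> str:
    """Escape the characters RFC 4515 reserves inside filter values."""
    replacements = {
        "\\": r"\5c",
        "*": r"\2a",
        "(": r"\28",
        ")": r"\29",
        "\x00": r"\00",
    }
    return "".join(replacements.get(ch, ch) for ch in value)


def user_search_filter(username: str) -> str:
    """Return a filter matching exactly one user's account entry."""
    return f"(&(objectClass=posixAccount)(uid={escape_filter_value(username)}))"


if __name__ == "__main__":
    print(user_search_filter("alice"))
    # A hostile "username" is neutralised instead of widening the search.
    print(user_search_filter("bob*)(uid=*"))
```

In practice a client would pass such a filter, with a search base such as the hypothetical `ou=people,dc=example,dc=org`, to an LDAP library or to a service like SSSD or nslcd rather than building queries by hand.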

Network file storage is typically handled by NFS (the Network File System) or, again, by Samba.
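
For the NFS case, each shared directory is listed in the server's `/etc/exports` file, together with the clients allowed to mount it and the export options. The sketch below assumes a single hypothetical office subnet, `192.168.1.0/24`, and illustrative share paths; it simply renders export lines in the format described in the `exports(5)` manual page.

```python
"""Sketch: rendering /etc/exports entries for an NFS file server.

Assumptions (hypothetical): the server shares /home and /srv/shared
with one office subnet, 192.168.1.0/24.
"""
import ipaddress


def export_line(path: str, network: str, options: list[str]) -> str:
    """Return one /etc/exports line granting `network` access to `path`."""
    # Validate the subnet so a typo fails here rather than on the server.
    net = ipaddress.ip_network(network, strict=True)
    # exports(5) syntax: no space between the client and its option list.
    return f"{path} {net}({','.join(options)})"


if __name__ == "__main__":
    office = "192.168.1.0/24"  # hypothetical office subnet
    # rw: clients may write; sync: reply only after data reaches disk;
    # no_subtree_check: skip per-request subtree verification.
    opts = ["rw", "sync", "no_subtree_check"]
    for share in ("/home", "/srv/shared"):
        print(export_line(share, office, opts))
```

After editing `/etc/exports`, running `exportfs -ra` on the server re-reads the file and applies the changes.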

In this scenario, users log in with their user name and password on any desktop, and get their own desktop and files, and access to resources like printers, scanners, and email systems. All important data, both organisational and personal, is stored on the server, meaning only one system needs to be backed up. This solution is functionally equivalent in most ways to a "Domain" in the MS world, but in my experience is far less complex.

This is the most flexible and highest-performance model from the users' point of view, but it requires you to maintain a large number of relatively powerful (and expensive) desktop computers. They boot from local hard disks, which are subject to hardware failure, and each one needs to be kept up to date. Because they concentrate resources at the desktop level, they're sometimes referred to as "fat" clients.

Network Booting

To reduce your maintenance requirements, you can instead configure your desktops to boot from boot images stored on your server rather than from local hard disks. I call these "chubby clients": they can be diskless and rely on the network for centralised functionality, but they still run applications locally.

This approach simplifies configuration: upgrading the central boot image means each computer is "upgraded" the next time it boots. Similarly, you can ensure a uniform set of applications is available to every user, because none of the user's "personality" is stored on a specific machine. A faulty desktop can simply be swapped for another one, and the user can immediately be productive in their environment again. The only potential downside is that these desktops depend on a functioning network.
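
As an illustration of the "one central image" idea, a common arrangement is PXE network booting with PXELINUX, where every diskless client reads the same boot menu from the server and mounts the same root filesystem over NFS. The sketch below generates such a menu. The server address, NFS path, and file names are hypothetical, and the kernel parameters follow the Linux kernel's NFS-root documentation, although the exact parameters an initramfs expects vary between distributions.

```python
"""Sketch: a PXELINUX menu that boots every diskless client from one image.

Assumptions (hypothetical): the server is 192.168.1.1, the shared root
filesystem lives in /srv/nfsroot, and the kernel and initrd are served
over TFTP as vmlinuz and initrd.img.
"""


def pxelinux_config(server_ip: str, nfs_root: str,
                    kernel: str = "vmlinuz", initrd: str = "initrd.img") -> str:
    """Return the text of a pxelinux.cfg/default file."""
    append = " ".join([
        f"initrd={initrd}",
        "root=/dev/nfs",                    # mount the root filesystem over NFS
        f"nfsroot={server_ip}:{nfs_root}",  # which server and directory to mount
        "ip=dhcp",                          # configure networking via DHCP at boot
        "ro",                               # clients share the image read-only
    ])
    lines = [
        "DEFAULT desktop",
        "LABEL desktop",
        f"  KERNEL {kernel}",
        f"  APPEND {append}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(pxelinux_config("192.168.1.1", "/srv/nfsroot"), end="")
```

Because every client reads the same file, pointing `nfsroot` at an upgraded image upgrades every desktop at its next boot.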

Thin Clients

If you want to minimise maintenance even further, you can take one more step towards centralisation by turning some desktops into "thin clients", which act as a window onto the capabilities of your central server. Linux supports thin clients out of the box via LTSP (the Linux Terminal Server Project).

With today's typically fast networks, these solutions are low cost, low maintenance, and offer superb performance; all schools and many businesses should be looking at them. A single commodity Linux server, comparable in price to a high-end desktop, can happily support dozens of thin clients, each consisting of very low-cost terminal hardware plus a screen, keyboard, and mouse. Nearly all of the actual processing takes place on the server, so the thin client's on-board specifications can be very modest. Focus your investment on the graphics hardware and monitors instead.
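
The cost argument can be made concrete with a little arithmetic. In the sketch below the server's price is spread across all seats, so the cost per thin-client seat falls as seats are added; the break-even point is the smallest number of seats at which a thin-client seat costs less than a fat-client desktop. All prices are hypothetical placeholders, not real quotes, and the function names are illustrative.

```python
"""Sketch: per-seat hardware cost of thin clients versus fat desktops.

All prices are hypothetical placeholders, not real quotes. Screens,
keyboards, and mice are left out because both models need them.
"""


def thin_cost_per_seat(server_price: float, terminal_price: float,
                       seats: int) -> float:
    """Spread one server's price across all seats, plus each seat's terminal."""
    if seats <= 0:
        raise ValueError("seats must be positive")
    return server_price / seats + terminal_price


def break_even_seats(server_price: float, terminal_price: float,
                     desktop_price: float) -> int:
    """Smallest seat count at which a thin-client seat is cheaper than a desktop.

    Solves server_price / seats + terminal_price < desktop_price for seats.
    """
    margin = desktop_price - terminal_price
    if margin <= 0:
        raise ValueError("terminals must cost less than desktops")
    return int(server_price // margin) + 1


if __name__ == "__main__":
    # Hypothetical figures only; substitute your own prices.
    server, terminal, desktop = 2000.0, 300.0, 1200.0
    print("break-even seats:", break_even_seats(server, terminal, desktop))
    for seats in (10, 30):
        print(seats, "seats:", round(thin_cost_per_seat(server, terminal, seats), 2))
```

With these placeholder numbers the break-even point is three seats; the real figure depends entirely on your own prices, and running costs such as power and administration time are not modelled.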

FOSS Desktops

For those who haven't had the pleasure of seeing them in action, I've included some examples of four widely used FOSS desktops in their default configurations:

Cinnamon

The GNU Image Manipulation Program (GIMP) and the LibreOffice Calc spreadsheet on the Cinnamon desktop.

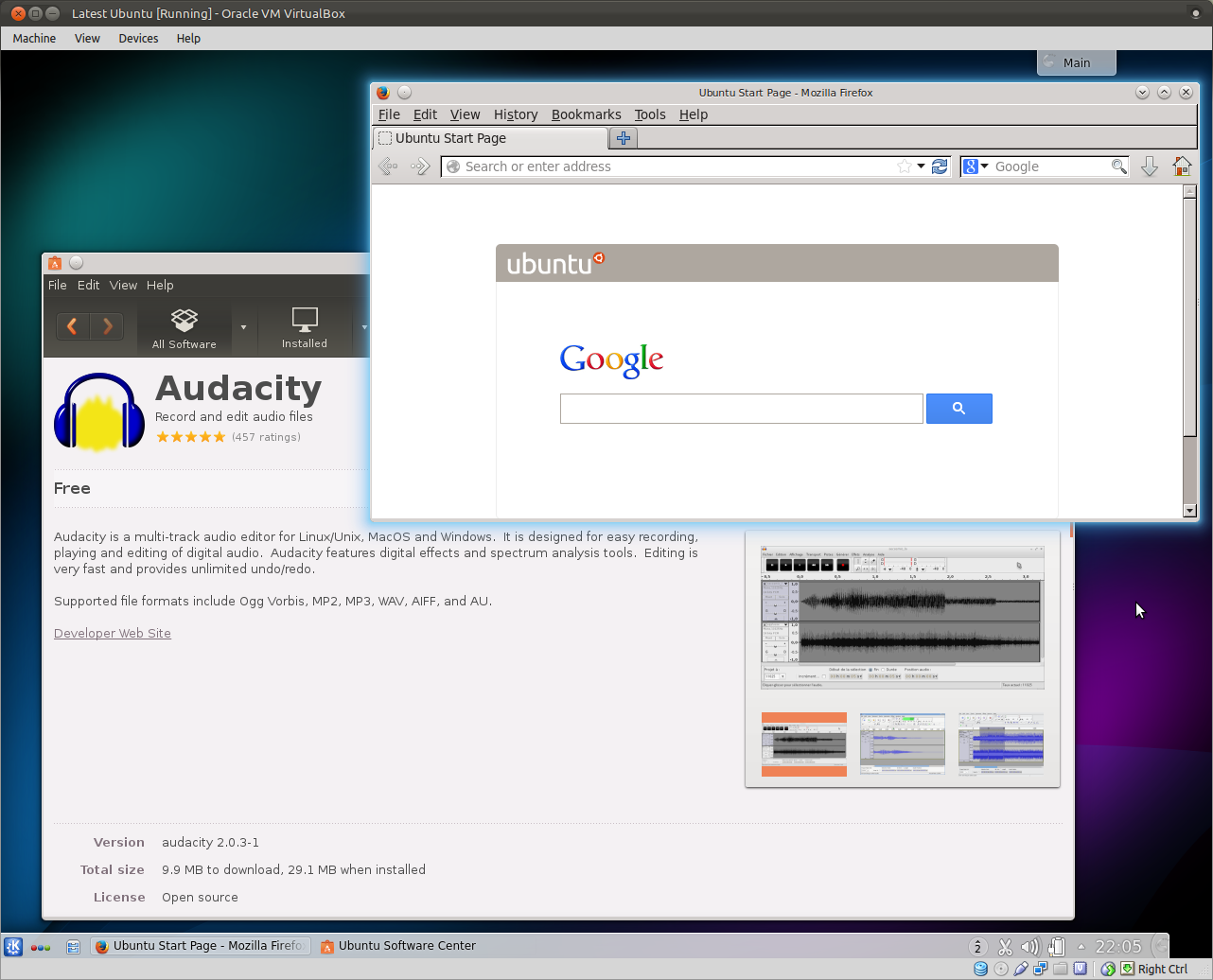

The K Desktop Environment, aka KDE

Firefox and the Ubuntu Software Centre on the K Desktop Environment.

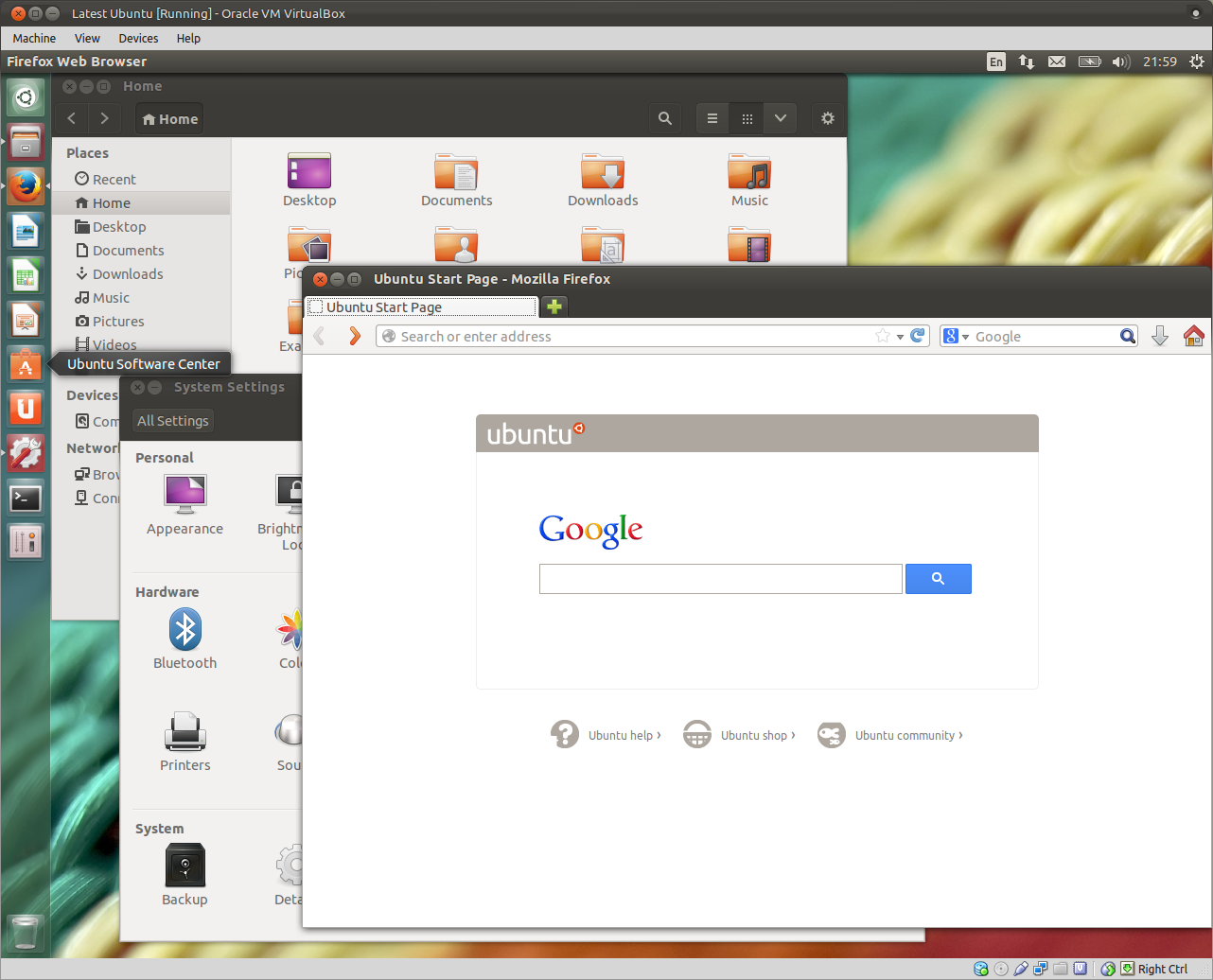

Unity

Firefox, System Settings, and the Nautilus file manager on the Unity desktop.

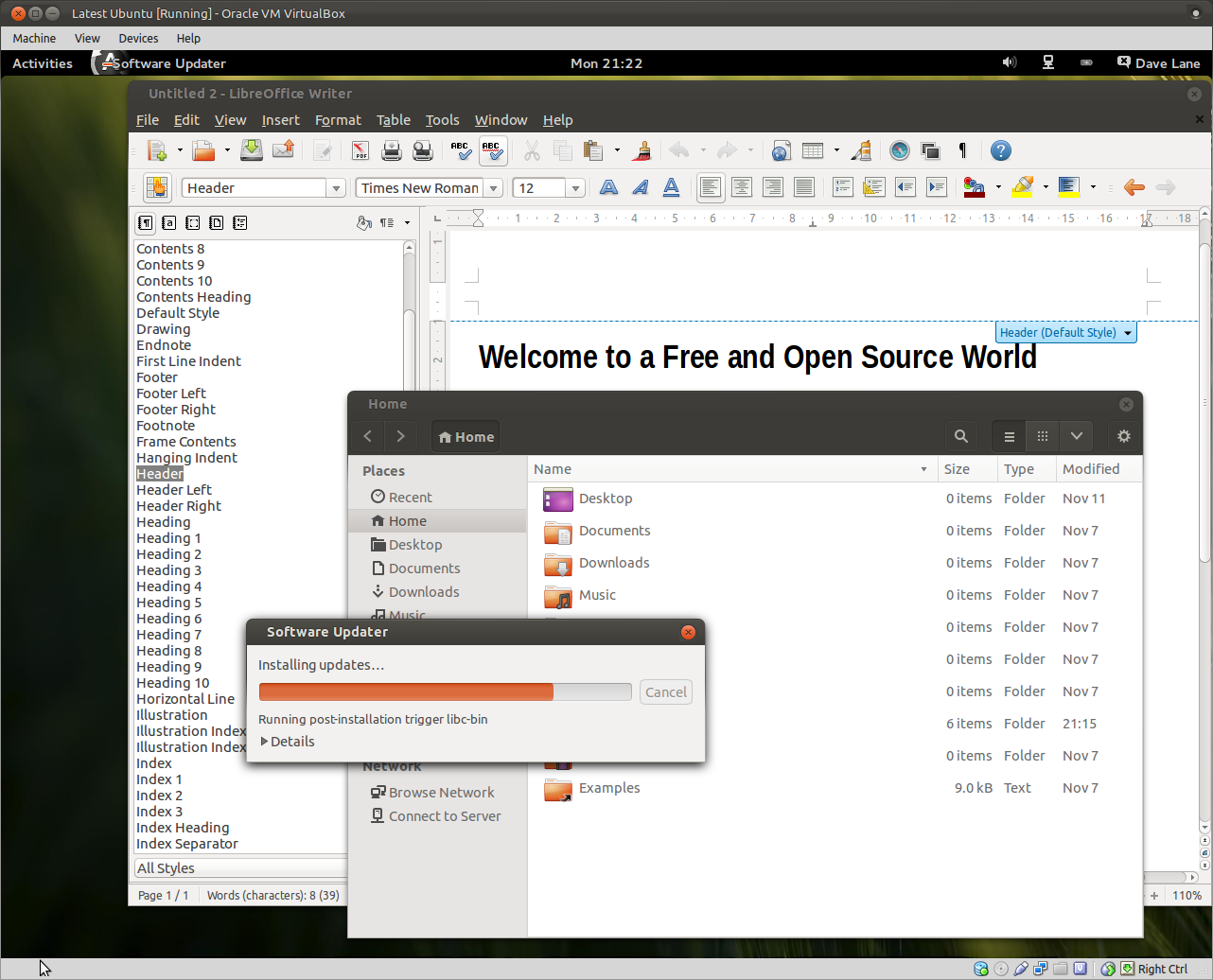

The Gnome Desktop

LibreOffice Writer, the Nautilus file manager, and software updates on the Gnome 3 desktop.

Next Week...

Next week, in the final instalment of the series, I'll look at something FOSS excels at: accommodating diversity. I'll cover BYOD, geographically distributed organisations, and how businesses adopting FOSS can both retain control of their own computing destiny and gain the benefits of "the Cloud"... by creating their own.

An edited version of this article also features on ComputerWorld NZ.

About the author: Dave Lane is a long-time FOSS exponent and developer. An ex-CRI research scientist and long-time FOSS development business owner, he does software and business development and project management for FOSS development firm Catalyst IT. He volunteers with the NZ Open Source Society, currently in the role of president.

This work is licensed under a Creative Commons Attribution-ShareAlike 3.0 Unported License.